Hello, my name is

Timothy Castiglia, PhD

Machine Learning Researcher

- castigliatim@gmail.com

- 1-845-661-6818

- GitHub

About me

I am a Machine Learning Researcher with a PhD in efficient distributed artificial intelligence & years of industry experience.

I prioritize the cost-efficiency and scalability of AI systems.

Skills

- Python - Deep learning

- C / C++ - Fast low-level computation

- Matlab - Matrix operations and visualization

- Rust - Fast web backend

- Bash - Scripting

- Java - App development

- PyTorch - Custom DL algorithms

- TensorFlow - Efficient DL algorithms

- Docker - Replicable environments

- AWS EC2 - Simulating distributed systems

- Git - Version control

- Jenkins - Software testing and verification

- Software Lead and Mentor 2016-2018

- Teaching Assistant 2018-2019

- Research Mentor 2021-2023

- IBM Research Collaborator 2020-2023

- Windows

- Ubuntu

- CentOS

- MacOS

- Raspbian

- Manjaro

- GNU/Linux

Work Experience

IBM Research

Summer 2022

Research Extern

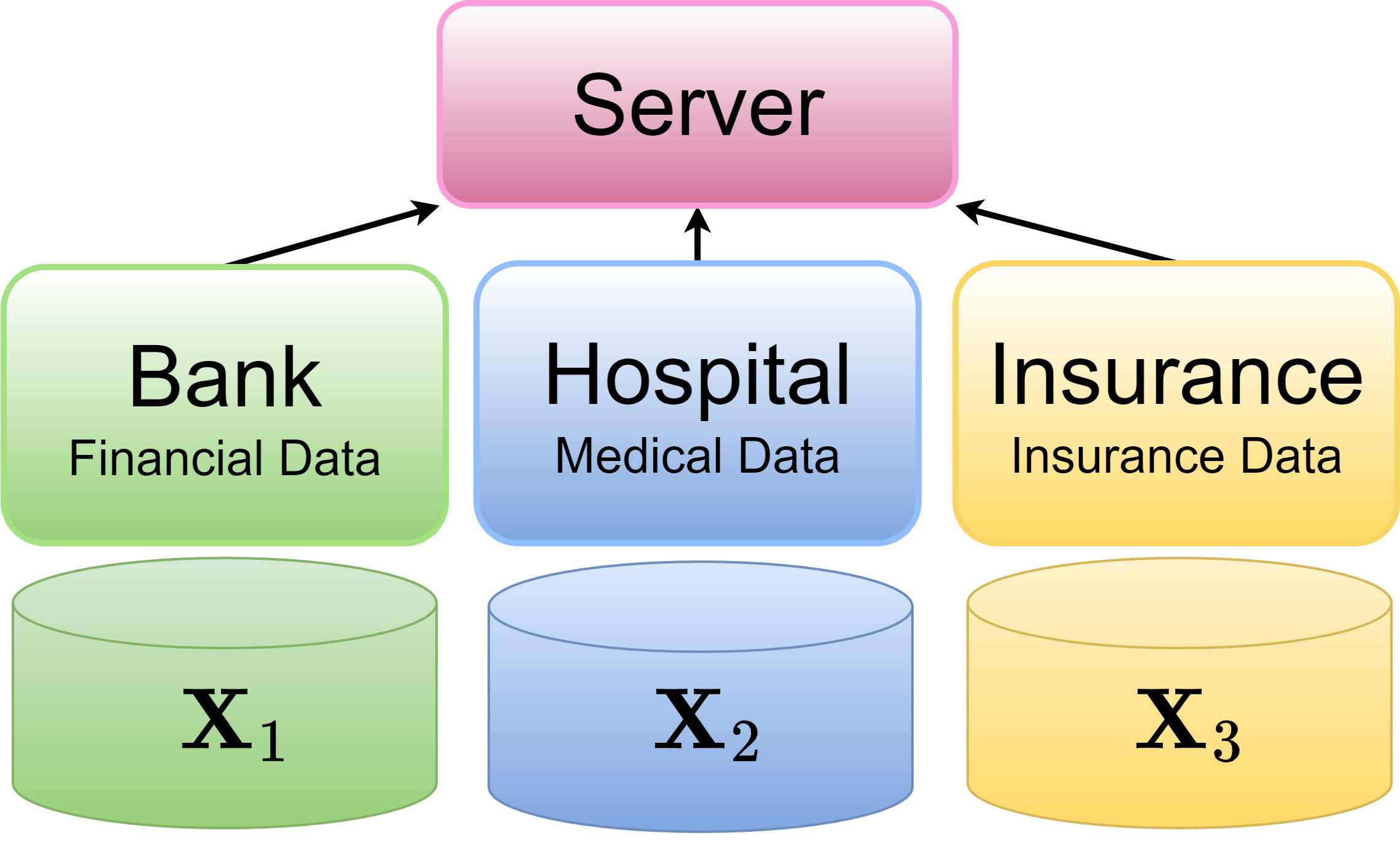

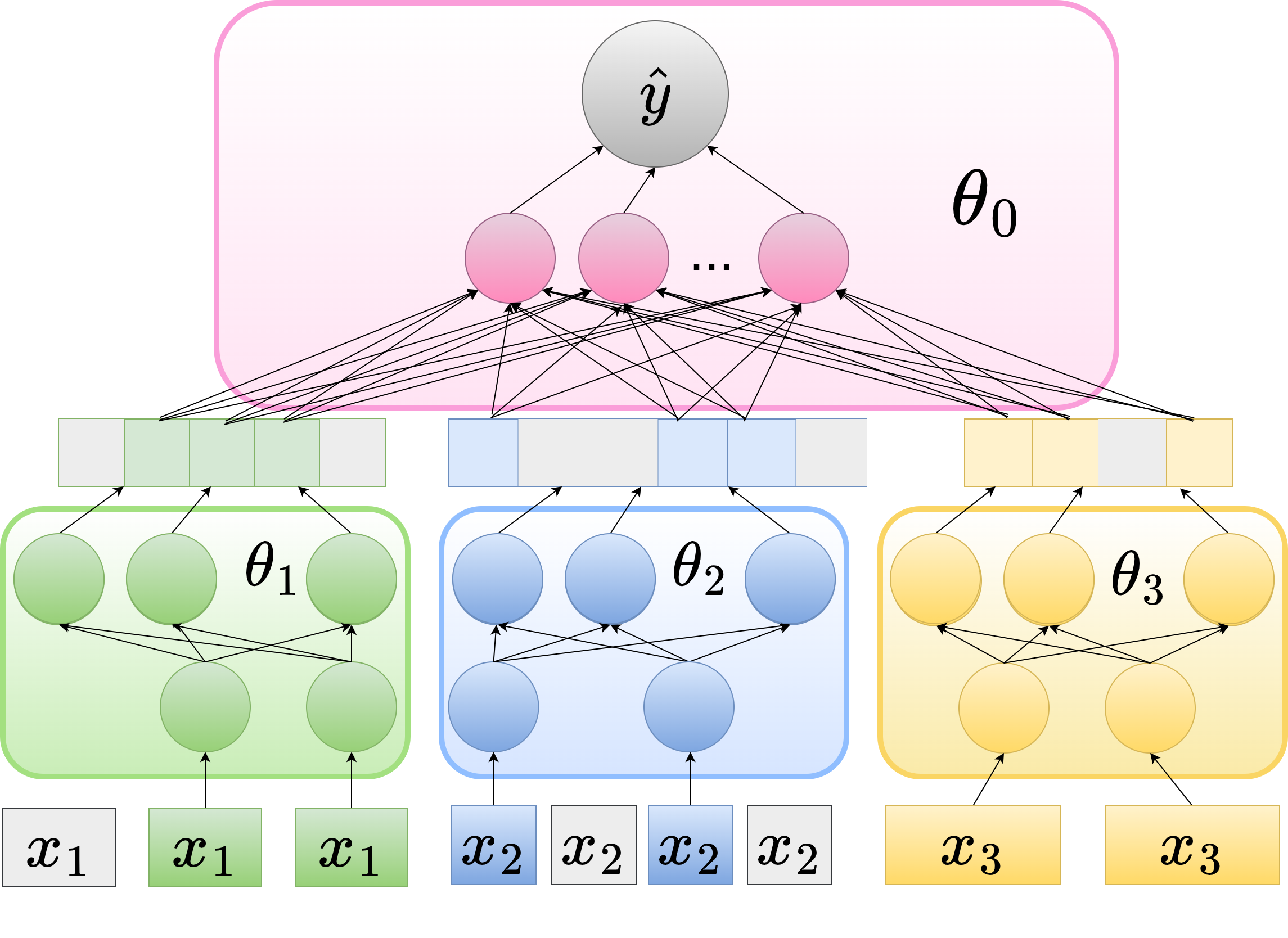

Designed novel algorithm of feature selection for deep neural networks in Vertical Federated Learning settings without sharing private features. Verified method using PyTorch and Tensorflow in IBM's Federated Learning library, demonstrated it can reduce computation and communication cost. Filed patent and first author on paper published in ICML 2023.

IBM Research

Summer 2021

Research Extern

Studied Vertical Federated Learning in collaboration with several researchers at IBM. Tackled several unsolved problems, including theoretical convergence with message compression, viability of privacy-preserving techniques, and flexibility of participant model updates. Experiments required extensive use of PyTorch and Tensorflow in HPC systems. Filed patent and first author on paper published in ICML 2022.

Black River Systems

Summer 2018

Software Engineer

Created back-end for several modules, written in Rust, for a system to monitor mission-critical equipment.

Critical Technologies

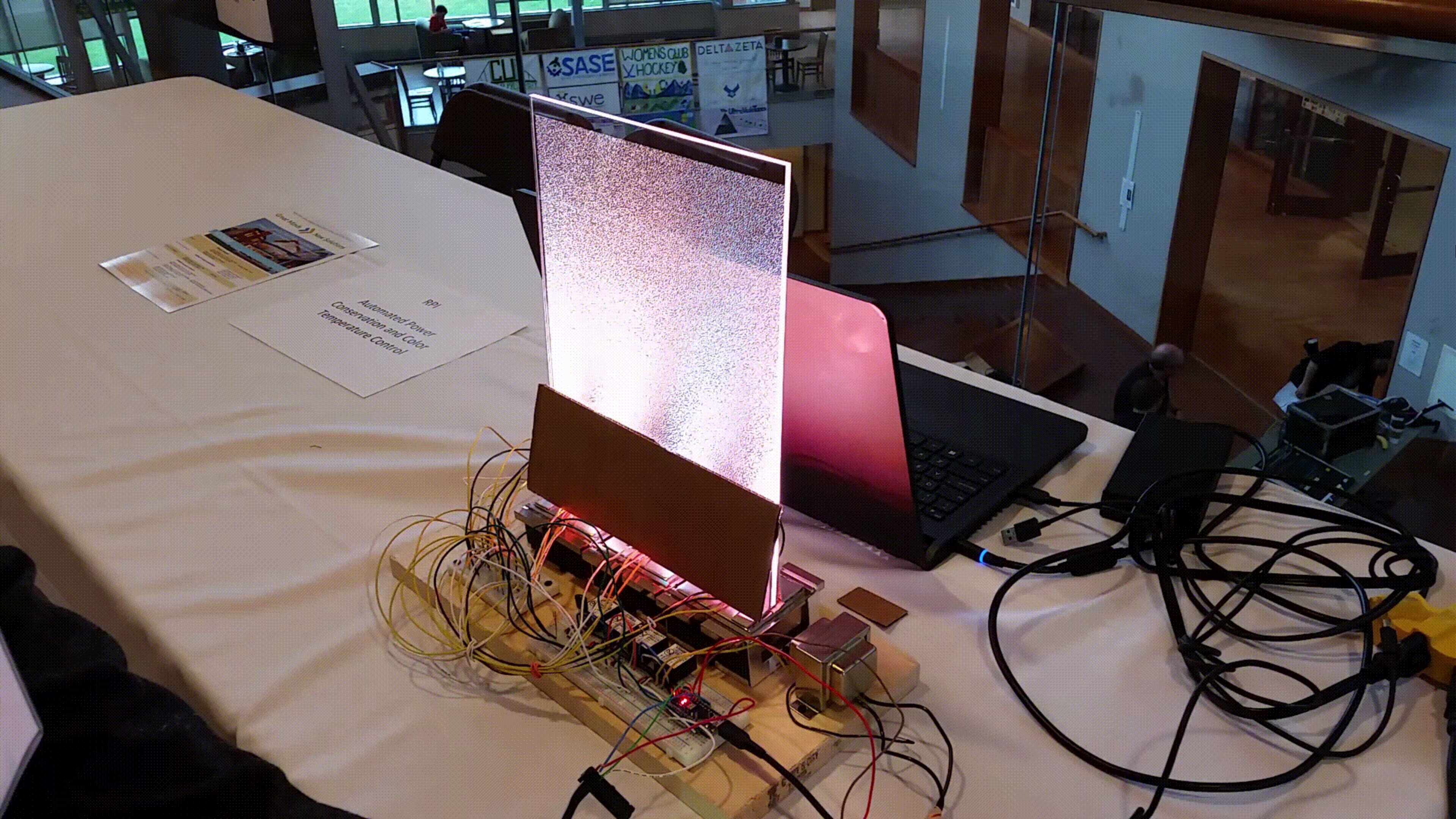

2016-2018

Lead Developer

Software lead of an online Spectrum Analyzer for analyzing interference in 4G networks. Application required fast streaming of spectra (∼ 5000 traces/second) from FPGA to disk through C++ interface, and streaming from Python backend to web front-end application. Led integration of hardware and software aspects of the product and mentored new software developers on the team.

Education

Rensselaer Polytechnic Institute

2018 - 2023

Doctor of Philosophy (PhD) in Computer Science

Rensselaer Polytechnic Institute

2013-2017

Bachelor of Science in Computer Science and Computer Engineering

Gallery

Publications

Published in ICML 2023

T. Castiglia, Y. Zhou, S.Wang, S. Kadhe, N. Baracaldo, and S. Patterson

LESS-VFL: Communication-Efficient Feature Selection for Vertical Federated Learning

We propose LESS-VFL, a communication-efficient feature selection method for distributed systems with vertically partitioned data. We consider a system of a server and several parties with local datasets that share a sample ID space but have different feature sets. The parties wish to collaboratively train a model for a prediction task. As part of the training, the parties wish to remove unimportant features in the system to improve generalization, efficiency, and explainability. In LESS-VFL, after a short pre-training period, the server optimizes its part of the global model to determine the relevant outputs from party models. This information is shared with the parties to then allow local feature selection without communication. We analytically prove that LESS-VFL removes spurious features from model training. We provide extensive empirical evidence that LESS-VFL can achieve high accuracy and remove spurious features at a fraction of the communication cost of other feature selection approaches.

Published in NeurIPS 2022 Workshop

T. Castiglia, S. Wang, and S. Patterson

Self-Supervised Vertical Federated Learning

We consider a system where parties store vertically-partitioned data with a partially overlapping sample space, and a server stores labels on a subset of data samples. Supervised Vertical Federated Learning (VFL) algorithms are limited to training models using only overlapping labeled data, which can lead to poor model performance or bias. Self-supervised learning has been shown to be effective for training on unlabeled data, but the current methods do not generalize to the vertically-partitioned setting. We propose a novel extension of self-supervised learning to VFL (SS-VFL), where unlabeled data is used to train representation networks and labeled data is used to train a downstream prediction network. We present two SS-VFL algorithms: SS-VFL-I is a two-phase algorithm which requires only one round of communication, while SS-VFL-C adds communication rounds to improve model generalization. We show that both SS-VFL algorithms can achieve up to 2x higher accuracy than supervised VFL when labeled data is scarce at a significantly reduced communication cost.

Under review

T. Castiglia, S. Wang, and S. Patterson

Flexible Vertical Federated Learning with Heterogeneous Parties

We propose Flexible Vertical Federated Learning (Flex-VFL), a distributed machine algorithm that trains a smooth, non-convex function in a distributed system with vertically partitioned data. We consider a system with several parties that wish to collaboratively learn a global function. Each party holds a local dataset; the datasets have different features but share the same sample ID space. The parties are heterogeneous in nature: the parties' operating speeds, local model architectures, and optimizers may be different from one another and, further, they may change over time. To train a global model in such a system, Flex-VFL utilizes a form of parallel block coordinate descent, where parties train a partition of the global model via stochastic coordinate descent. We provide theoretical convergence analysis for Flex-VFL and show that the convergence rate is constrained by the party speeds and local optimizer parameters. We apply this analysis and extend our algorithm to adapt party learning rates in response to changing speeds and local optimizer parameters. Finally, we compare the convergence time of Flex-VFL against synchronous and asynchronous VFL algorithms, as well as illustrate the effectiveness of our adaptive extension.

Published in ICML 2022

T. Castiglia, A. Das, S. Wang, and S. Patterson

C-VFL: Communication-Efficient Learning with Vertically Partitioned Data

We propose Compressed Vertical Federated Learning (C-VFL) for communication-efficient training on vertically partitioned data. In C-VFL, a server and multiple parties collaboratively train a model on their respective features utilizing several local iterations and sharing compressed intermediate results periodically. Our work provides the first theoretical analysis of the effect message compression has on distributed training over vertically partitioned data. We prove convergence of non-convex objectives at a rate ofO(1/sqrt(T)) when the compression error is bounded over the course of training. We provide specific requirements for convergence with common compression techniques, such as quantization and top-k sparsification. Finally, we experimentally show compression can reduce communication by over 90% without a significant decrease in accuracy over VFL without compression.

Published in ACM Transactions on Intelligent Systems and Technology 2022

A. Das, T. Castiglia, S.Wang, and S. Patterson

Cross-Silo Federated Learning for Multi-Tier Networks with Vertical and Horizontal Partitioning

We consider federated learning in tiered communication networks. Our network model consists of a set of silos, each holding a vertical partition of the data. Each silo contains a hub and a set of clients, with the silo’s vertical data shard partitioned horizontally across its clients. We propose Tiered Decentralized Coordinate Descent (TDCD), a communication-efficient decentralized training algorithm for such two-tiered networks. The clients in each silo perform multiple local gradient steps before sharing updates with their hub to reduce communication overhead. Each hub adjusts its coordinates by averaging its workers’ updates, and then hubs exchange intermediate updates with one another. We present a theoretical analysis of our algorithm and show the dependence of the convergence rate on the number of vertical partitions and the number of local updates. We further validate our approach empirically via simulation-based experiments using a variety of datasets and objectives.

Published in ICLR 2021

T. Castiglia, A. Das, and S. Patterson

Multi-level local SGD: Distributed SGD for Heterogeneous Hierarchical Networks

We propose Multi-Level Local SGD, a distributed stochastic gradient method for learning a smooth, non-convex objective in a multi-level communication network with heterogeneous workers. Our network model consists of a set of disjoint sub-networks, with a single hub and multiple workers; further, workers may have different operating rates. The hubs exchange information with one another via a connected, but not necessarily complete communication network. In our algorithm, sub-networks execute a distributed SGD algorithm, using a hub-and-spoke paradigm, and the hubs periodically average their models with neighboring hubs. We first provide a unified mathematical framework that describes the Multi-Level Local SGD algorithm. We then present a theoretical analysis of the algorithm; our analysis shows the dependence of the convergence error on the worker node heterogeneity, hub network topology, and the number of local, sub-network, and global iterations. We illustrate the effectiveness of our algorithm in a multi-level network with slow workers via simulation-based experiments.

Published in IEEE Transactions on Control of Network Systems 2021

Y. Yi, T. Castiglia, and S. Patterson

Shifting Opinions in a Social Network Through Leader Selection

We study the French-DeGroot opinion dynamics in a social network with two polarizing parties. We consider a network in which the leaders of one party are given, and we pose the problem of selecting the leader set of the opposing party so as to shift the average opinion to a desired value. When each party has only one leader, we express the average opinion in terms of the transition matrix and the stationary distribution of random walks in the network. The analysis shows balance of influence between the two leader nodes. We show that the problem of selecting at most k absolute leaders to shift the average opinion is NP-hard. Then, we reduce the problem to a problem of submodular maximization with a submodular knapsack constraint and an additional cardinality constraint and propose a greedy algorithm with upper bound search to approximate the optimum solution. We also conduct experiments in random networks and real-world networks to show the effectiveness of the algorithm.

Published in ICDCS 2020

T. Castiglia, C. Goldberg, and S. Patterson

A Hierarchical Model for Fast Distributed Consensus in Dynamic Networks

State machine replication is a foundational tool that is used to provide availability and fault tolerance in distributed systems. Safe replication requires a consensus algorithm as a method to achieve agreement on the order of system updates. Designing algorithms that are safe while retaining high throughput is imperative for today's systems. Two of the most widely adopted consensus algorithms, Paxos and Raft, have received much attention and use in industry. While both Paxos and Raft provide safe specification for maintaining a replicated log of system updates, Raft aims for ease of understandability and implementation.

Published in IEEE Conference on Decision and Control 2019

T. Castiglia and S. Patterson

Distributed Submodular Maximization with Bounded Communication Cost

We study distributed submodular maximization in networks with heterogeneous communication costs. In the distributed maximization algorithm, each agent selects a strategy from a discrete set of options, and the objective is to maximize a global submodular function over these strategies. The network topology imposes limitations on information sharing, and several recent works have derived bounds on the performance of the algorithm in terms of graph properties. In this work, we consider the problem of designing a network that maximizes the algorithm performance subject to a bound on the total communication cost of the algorithm execution. We first prove that this network design problem is NP-hard. We then present an approximation algorithm for it. Next, we show that the algorithm communication cost can be further reduced by using multi-hop routing for information propagation. We give a polynomial-time algorithm that finds the optimal information propagation scheme for the distributed algorithm in edge-weighted networks. Finally, we present experimental results highlighting the performance of our algorithms.

Patents

T. Castiglia, Y. Zhou, S. Wang, S. Kadhe, N. Baracaldo, and S. Patterson

A System and Method to Perform Feature Selection in Vertical Federated Learning

T. Castiglia, A. Das, S. Wang, and S. Patterson

Vertical Federated Learning with Compressed Embeddings

T. Castiglia, S. Wang, and S. Patterson

Semi-Supervised Vertical Federated Learning

Vertical Federated Learning with Secure Aggregation

Portfolio & CV

Use the template as-is or try different colors and fonts from the options below.

Try Other Colors

Try Other Fonts

- Barlow Semi Condensed/Barlow Semi Condensed

- Aa

- Aa

- Aa

- Aa

- Aa

- Aa

- Aa

- Aa